We’ve explained what “Tensor cores” are in a previous blog post, but what you may not know is that Nvidia is working hard to make these already blisteringly fast GPU cores even faster. The T4 PCIe card was introduced in 2018, but it’s still a product that many people have never heard of. Yet it can play a crucial part in modern data centers.

What Does the T4 Do?

The T4 is designed to accelerate the tensor math at the heart of machine learning applications and other high-performance workloads. It can also perform general-purpose GPU computing tasks using its CUDA cores, so anything that’s been written to use CUDA on regular GPUs should work here as well.
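For readers unfamiliar with the term, the “tensor math” in question mostly comes down to matrix multiply-accumulate operations of the form D = A × B + C. The pure-Python sketch below only illustrates that arithmetic on a small 4×4 tile. It runs on the CPU, and the function name `matmul_accumulate` is hypothetical rather than part of any Nvidia API.

```python
def matmul_accumulate(a, b, c):
    """Return D = A x B + C for square matrices given as lists of rows."""
    n = len(a)
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            # Start from the accumulator value, then add each product term.
            acc = c[i][j]
            for k in range(n):
                acc += a[i][k] * b[k][j]
            d[i][j] = acc
    return d


# Illustrative 4x4 inputs: identity x ones + ones gives a tile of twos.
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
ones = [[1.0] * 4 for _ in range(4)]
result = matmul_accumulate(identity, ones, ones)
print(result[0])  # [2.0, 2.0, 2.0, 2.0]
```

Dedicated tensor hardware performs many of these small tile operations per clock cycle, typically at reduced precision, which is where the speed-up over looping in software comes from.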

What the T4 doesn’t do is connect to a display and act as a graphics card. It doesn’t have any back-panel I/O at all and is designed to be installed in low-profile server systems.

The T4’s Specs

The T4 card is essentially what you get when you take an Nvidia RTX GPU and remove the graphics card features, such as display outputs. What you have left over are the CUDA cores, dedicated Tensor Cores and the ray-tracing acceleration hardware found in RTX GPUs. The T4 is based on the Turing architecture specifically, so its tensor and ray-tracing hardware matches that of equivalent RTX cards on a per-core basis.

The T4 in particular has:

2560 CUDA cores.

320 Turing Tensor cores.

16GB of GDDR6 with ECC.
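As a rough sanity check, the core counts above translate into theoretical peak throughput once a clock speed is assumed. The sketch below assumes a boost clock of 1.59 GHz, one fused multiply-add (two floating-point operations) per CUDA core per clock, and 64 fused multiply-adds per Tensor core per clock at FP16. None of these three figures appear in the list above, so treat them as assumptions and the results as ceilings rather than sustained performance.

```python
CUDA_CORES = 2560      # from the spec list above
TENSOR_CORES = 320     # from the spec list above

BOOST_CLOCK_HZ = 1.59e9              # assumption: boost clock, not in this post
FMA_PER_TENSOR_CORE_PER_CLOCK = 64   # assumption: FP16 rate per Tensor core
FLOPS_PER_FMA = 2                    # one multiply plus one add


def peak_tflops(units, fma_per_unit_per_clock, clock_hz):
    """Theoretical peak in TFLOPS for `units` cores running at `clock_hz`."""
    return units * fma_per_unit_per_clock * FLOPS_PER_FMA * clock_hz / 1e12


fp32 = peak_tflops(CUDA_CORES, 1, BOOST_CLOCK_HZ)
fp16_tensor = peak_tflops(TENSOR_CORES, FMA_PER_TENSOR_CORE_PER_CLOCK, BOOST_CLOCK_HZ)

print(f"FP32 (CUDA cores):   {fp32:.1f} TFLOPS")
print(f"FP16 (Tensor cores): {fp16_tensor:.1f} TFLOPS")
```

Under these assumptions the Tensor cores come out roughly eight times faster than the CUDA cores on paper, which is why workloads that can use them benefit so much.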

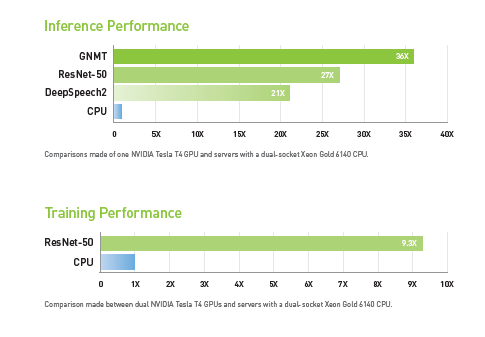

Compared to a regular x86 CPU typically used in servers, the T4 is much, much faster at processing jobs such as training neural nets or drawing inferences from data.

It’s about more than just hardware performance, however. The T4 card is passively cooled, low-profile and only draws 70W at its peak. In environments where the energy cost of computation is a major factor, this can make it far cheaper to run machine learning and GPGPU tasks on something like the T4 than on a typical server-grade CPU, because the same job finishes sooner on a modest power budget.
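To put the 70W figure in perspective, the sketch below estimates the yearly electricity use and cost of a card running flat out around the clock. The price of $0.12 per kWh is a placeholder assumption, not a figure from this post, and the calculation ignores cooling overhead and the host server’s own power draw.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_kwh(watts, utilisation=1.0):
    """Energy used in a year by a device drawing `watts` at the given duty cycle."""
    return watts * utilisation * HOURS_PER_YEAR / 1000


def annual_cost(watts, price_per_kwh, utilisation=1.0):
    """Yearly electricity cost in the same currency as `price_per_kwh`."""
    return annual_energy_kwh(watts, utilisation) * price_per_kwh


T4_PEAK_WATTS = 70           # from the paragraph above
ASSUMED_PRICE_PER_KWH = 0.12  # assumption: placeholder electricity price in USD

t4_kwh = annual_energy_kwh(T4_PEAK_WATTS)
t4_cost = annual_cost(T4_PEAK_WATTS, ASSUMED_PRICE_PER_KWH)
print(f"{t4_kwh:.1f} kWh per year, about ${t4_cost:.2f}")
```

Swapping in your own electricity price and the power draw of the hardware you are comparing against gives a quick first estimate of the running-cost difference.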

Who is the T4 For?

The T4 has a place both in server systems and in workstations. It is especially useful in workstations where you need to do machine learning tasks such as creating deep fakes or upscaling footage with high-end AI upscalers. The use cases for machine-learning acceleration are growing by the day, and adding a dedicated card to handle that work while you keep working in the foreground could be a cost-effective way to boost your available processing power.

For server owners in data centers, or perhaps just in SMEs or creative groups who need to share processing time, T4 cards and the like offer a way to accelerate offline rendering or machine-learning workloads in a small package. Many servers already have several low-profile PCIe slots to spare, which means that T4s can act as in-place upgrades and free up traditional CPUs for other tasks.

A Niche Card With Wide Applications

While a headless GPU packed with specialized silicon isn’t a component we’d recommend to every customer, the application of machine learning methods and the need to perform tensor math are growing rapidly. The T4 makes a whole lot of sense for a surprisingly large number of users.