The latest GPUs from Nvidia have special hardware inside that accelerates ray-traced graphics, allowing them to be rendered in real time. These RT (ray tracing) cores have pushed what’s possible in real-time rendering to new heights, but what are RT cores really, and how do they work?

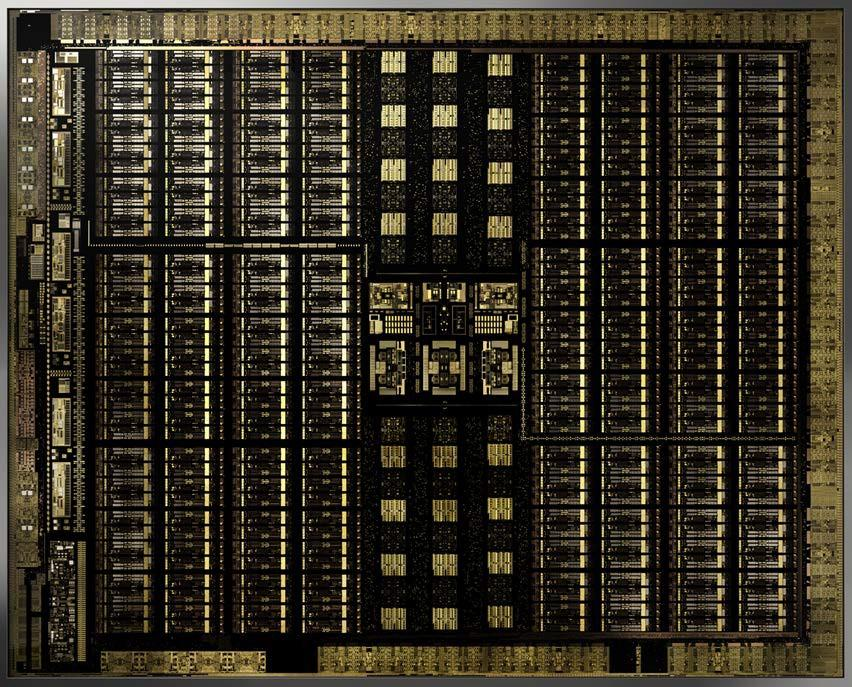

The Parts of an RTX GPU

An Nvidia RTX GPU, which is the product series in question here, has three main types of processor.

The first are its CUDA cores. These are general computation cores. Well, as “general” as modern GPU cores can be. They are small, relatively simple processors, and modern GPUs have thousands of them working in parallel. These are the processors that work out how to shade each pixel you see on screen and pull off all the other effects you see in traditional (rasterized) graphics.

Next, RTX cards have Tensor cores. We’ve written about Tensor cores before, but in short, they are built to accelerate tensor math: huge batches of matrix multiplications and additions. These calculations are fundamental to machine learning and artificial intelligence, particularly neural networks. These cores can accelerate machine learning software in any field that uses tensor math, but they also play a role in graphics. Nvidia uses them to clean up ray-traced images and to intelligently upscale images rendered at lower resolutions using a technology known as DLSS (Deep Learning Super Sampling).
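
To make “tensor math” a little more concrete: the operation Tensor cores are built around is a matrix multiply-accumulate, D = A × B + C, performed on small tiles of numbers. The Python sketch below spells that operation out with plain loops. The `mma` name and the 4×4 tile size are illustrative assumptions; real hardware works on fixed tile shapes, often at reduced precision such as FP16, and completes the whole tile in one hardware operation rather than in loops.

```python
# A minimal sketch of the multiply-accumulate Tensor cores are built for:
# D = A x B + C on a small square tile. Plain lists stand in for registers;
# the 4x4 size is an illustrative assumption, not a hardware specification.

def mma(a, b, c):
    """Return D = A @ B + C for square matrices given as lists of lists."""
    n = len(a)
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = c[i][j]  # start from the accumulator tile C
            for k in range(n):
                acc += a[i][k] * b[k][j]
            d[i][j] = acc
    return d


identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
a = [[float(i * 4 + j) for j in range(4)] for i in range(4)]
ones = [[1.0] * 4 for _ in range(4)]

d = mma(a, identity, ones)  # A x I + 1 simply adds one to every element of A
print(d[0])  # [1.0, 2.0, 3.0, 4.0]
```

Neural networks spend most of their time doing exactly this kind of operation over and over, which is why dedicating silicon to it pays off.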

Finally, we get to the RT cores this post is about. Their job is to do the math of ray tracing as quickly as possible: fast enough to show a moving image on screen at playable frame rates. But hang on a second, what is ray tracing to begin with?

Ray Tracing in a Nutshell

In real life, what you see is the result of photons of different wavelengths hitting the retina of your eye after being gathered and focused there by its lens.

Before those photons enter your eye, they’ve been bouncing around the world, interacting with all the objects around you. That’s how the scene you see is constructed: photons bounce around, interact with objects, get absorbed or reflected, and some of them finally reach your eyes.

Real-time 3D computer graphics have traditionally not been rendered in a way that’s anything like this. Why? Because simulating the way light works is incredibly computationally intensive. Instead, ray tracing has been used extensively for offline rendering, where a single frame may take hours to compute. That’s how studios make blockbuster CG-animated films and the visual effects in live-action movies.

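RT cores specifically accelerate the key math needed to trace virtual rays of light through a scene. In ray tracing, though, the rays are usually fired from the “eye” (the virtual camera) into the scene, which is the reverse of how we see in real life. Within the simulation, however, the result is more or less the same, because light follows the same paths in either direction, and starting at the camera means no work is wasted on rays that never reach the viewer.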
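
Here’s a minimal sketch of what “firing rays from the eye” looks like for a simple pinhole camera. It assumes the camera sits at the origin looking down the -z axis, with pixel (0, 0) in the top-left corner; `primary_ray` is a hypothetical helper, not part of any Nvidia API. It works out the direction of the ray passing through the center of a given pixel.

```python
import math

def primary_ray(px, py, width, height, fov_deg=60.0):
    """Return (origin, unit direction) of the ray through the center of pixel (px, py).

    Hypothetical helper. Assumes a pinhole camera at the origin looking down -z,
    +y up, pixel (0, 0) at the top-left, and a vertical field of view of fov_deg.
    """
    aspect = width / height
    scale = math.tan(math.radians(fov_deg) / 2)
    # Map the pixel center to [-1, 1] on each axis, then scale by FOV and aspect ratio.
    x = (2 * (px + 0.5) / width - 1) * aspect * scale
    y = (1 - 2 * (py + 0.5) / height) * scale
    length = math.sqrt(x * x + y * y + 1)
    return (0.0, 0.0, 0.0), (x / length, y / length, -1 / length)


# For a 3x3 image, the middle pixel's ray points straight ahead.
origin, direction = primary_ray(1, 1, 3, 3)
print(direction)  # (0.0, 0.0, -1.0)
```

A renderer would call something like this once per pixel (or several times, for anti-aliasing), then trace each ray into the scene.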

RT Cores Are ASICs

RT cores are an example of an ASIC, or application-specific integrated circuit. You may have heard of ASICs in the context of cryptocurrency mining, where chips are designed to do nothing but the cryptographic math of one specific coin.

In short, RT cores are extra, specialized circuits that sit alongside the more general-purpose CUDA cores and can be called on in the rendering pipeline whenever a ray-tracing calculation comes along.

The CUDA cores hand that job off to the RT cores, then use the answers that come back to render the scene and correctly shade the pixels in front of your eyeballs.
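
That division of labor can be sketched in a few lines. In this hypothetical Python example, `trace()` stands in for the RT core’s job (finding the closest triangle a ray hits, here using the standard Möller–Trumbore ray/triangle test) and `shade()` stands in for the CUDA cores’ job (turning that hit into a brightness). The function names, the simple two-sided diffuse shading, and the brute-force loop over every triangle are all illustrative assumptions; that brute-force loop is exactly the slow approach a BVH helps avoid.

```python
# Hypothetical sketch: trace() plays the RT core's role (find what a ray hits)
# and shade() plays the CUDA cores' role (turn that hit into a brightness).
# Vectors are plain (x, y, z) tuples; triangles are tuples of three vectors.

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def intersect_triangle(orig, direction, tri, eps=1e-9):
    """Moller-Trumbore ray/triangle test: distance t along the ray, or None."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:               # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(orig, v0)
    u = dot(s, p) * inv_det          # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return t if t > eps else None    # ignore hits behind the ray origin

def trace(orig, direction, triangles):
    """RT-core stand-in: (t, index) of the closest hit, or None on a miss."""
    best = None
    for i, tri in enumerate(triangles):
        t = intersect_triangle(orig, direction, tri)
        if t is not None and (best is None or t < best[0]):
            best = (t, i)
    return best

def shade(hit, triangles, light_dir):
    """CUDA-core stand-in: two-sided diffuse shading; light_dir must be unit length."""
    if hit is None:
        return 0.0                   # background brightness for rays that miss
    v0, v1, v2 = triangles[hit[1]]
    n = cross(sub(v1, v0), sub(v2, v0))
    return abs(dot(n, light_dir)) / dot(n, n) ** 0.5


# One triangle 5 units in front of a camera at the origin looking down -z.
tris = [((-1.0, -1.0, -5.0), (1.0, -1.0, -5.0), (0.0, 1.0, -5.0))]
hit = trace((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), tris)
print(hit)                                # (5.0, 0)
print(shade(hit, tris, (0.0, 0.0, 1.0)))  # 1.0
```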

What Do RT Cores Actually Accelerate?

But we can go into a little more detail than that! RT cores aren’t actually doing the full-fat job of ray tracing. Instead, they lean on a well-established shortcut that makes working out where light rays hit objects in a scene far less computationally intense than testing every ray against every object.

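Scene geometry is organized into a data structure known as a BVH (Bounding Volume Hierarchy). It’s a tree of nested boxes in 3D space: each box encloses a chunk of the scene, larger boxes contain smaller ones, and the smallest boxes hold the actual triangles that make up the objects.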
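
As a rough illustration of that structure, the Python sketch below builds a tiny BVH over a list of triangles. It uses one simple strategy (split each box along its longest axis at the median triangle); production BVH builders commonly use more sophisticated heuristics, and `Node`, `build_bvh` and `leaf_size` are hypothetical names rather than any real driver or API.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Node:
    lo: tuple                                 # minimum corner of this node's bounding box
    hi: tuple                                 # maximum corner
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    tris: list = field(default_factory=list)  # triangles, stored only in leaves

def bounds(tris):
    """Axis-aligned box enclosing every vertex of every triangle."""
    pts = [p for tri in tris for p in tri]
    lo = tuple(min(p[a] for p in pts) for a in range(3))
    hi = tuple(max(p[a] for p in pts) for a in range(3))
    return lo, hi

def centroid(tri):
    return tuple(sum(p[a] for p in tri) / 3 for a in range(3))

def build_bvh(tris, leaf_size=2):
    """Recursively build a BVH; leaf_size (at least 1) caps triangles per leaf."""
    lo, hi = bounds(tris)
    if len(tris) <= leaf_size:
        return Node(lo, hi, tris=list(tris))
    # Split along the longest axis of the box, at the median triangle centroid.
    axis = max(range(3), key=lambda a: hi[a] - lo[a])
    ordered = sorted(tris, key=lambda t: centroid(t)[axis])
    mid = len(ordered) // 2
    return Node(lo, hi,
                left=build_bvh(ordered[:mid], leaf_size),
                right=build_bvh(ordered[mid:], leaf_size))


# Four small triangles spaced out along the x axis.
tris = [((10 * k, 0, 0), (10 * k + 1, 0, 0), (10 * k, 1, 0)) for k in range(4)]
root = build_bvh(tris)
print(root.lo, root.hi)             # (0, 0, 0) (31, 1, 0)
print(root.left.hi, root.right.lo)  # (11, 1, 0) (20, 0, 0)
```

The root box covers the whole scene, while each child box only covers two of the four triangles, so a ray that misses one child can ignore half the scene at once.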

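The RT cores look for ray intersections within this BVH structure. If a ray misses a box, it can’t hit anything inside that box, so everything within it can be skipped; only the triangles in boxes the ray actually passes through need to be tested. The results of these tests tell the shaders which surface each ray hit, which in turn determines the color of the relevant pixel. Each test is relatively simple, but the RT cores can do them in massive volume and at incredible speed.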
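
A common way to do the ray/box check in software is the “slab test,” and traversal walks the tree while skipping every box the ray misses. Below is a minimal Python sketch of both on a hand-built five-node tree; `Node`, `hits_box` and `traverse` are hypothetical names, and dedicated hardware carries out these steps very differently (and massively in parallel).

```python
import math
from dataclasses import dataclass, field

@dataclass
class Node:
    lo: tuple                                     # min corner of the box
    hi: tuple                                     # max corner of the box
    children: list = field(default_factory=list)  # empty for leaf nodes
    prims: list = field(default_factory=list)     # primitive ids in a leaf

def hits_box(orig, direction, lo, hi):
    """Slab test: does the ray orig + t * direction (t >= 0) pass through the box?"""
    t_near, t_far = 0.0, math.inf
    for a in range(3):
        if direction[a] == 0.0:
            # Parallel to this pair of planes: origin must already lie between them.
            if orig[a] < lo[a] or orig[a] > hi[a]:
                return False
            continue
        t1 = (lo[a] - orig[a]) / direction[a]
        t2 = (hi[a] - orig[a]) / direction[a]
        t_near = max(t_near, min(t1, t2))  # latest entry across all slabs
        t_far = min(t_far, max(t1, t2))    # earliest exit across all slabs
    return t_near <= t_far

def traverse(root, orig, direction):
    """Collect primitive ids from leaves the ray reaches; count box tests."""
    stack, candidates, tests = [root], [], 0
    while stack:
        node = stack.pop()
        tests += 1
        if not hits_box(orig, direction, node.lo, node.hi):
            continue  # a miss rules out everything inside this box
        if node.children:
            stack.extend(node.children)
        else:
            candidates.extend(node.prims)
    return candidates, tests


# Hand-built tree: one leaf near x = 0..2, and a subtree with two leaves in x = 10..20.
root = Node((0, 0, 0), (20, 2, 2), children=[
    Node((0, 0, 0), (2, 2, 2), prims=[0, 1]),
    Node((10, 0, 0), (20, 2, 2), children=[
        Node((10, 0, 0), (12, 2, 2), prims=[2]),
        Node((18, 0, 0), (20, 2, 2), prims=[3]),
    ]),
])
print(traverse(root, (1, 1, 5), (0, 0, -1)))   # ([0, 1], 3)
print(traverse(root, (19, 1, 5), (0, 0, -1)))  # ([3], 5)
```

For the first ray, only three of the five boxes are tested, because missing the right-hand box rules out both leaves inside it. The candidate primitives returned would then go on to ray/triangle tests.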

Even so, real-time frame rates only leave room for a few rays per pixel, so the raw result is fairly low-fidelity and grainy. That’s where the Tensor cores come in, applying a machine-learning denoiser in real time to clean up the picture.
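
The denoiser described here is a trained neural network, which is well beyond a short example. As a much cruder, non-learned stand-in, the sketch below shows the basic idea behind denoising: estimate each pixel partly from its neighbors, trading a little sharpness for much less grain. `box_denoise` is a hypothetical name, and a plain box filter is swapped in purely for illustration; it is not how Nvidia’s denoiser works.

```python
import random

def box_denoise(img, radius=1):
    """Non-ML stand-in denoiser: replace each pixel with the mean of its
    (2*radius+1)^2 neighborhood, skipping neighbors outside the image."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += img[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out

def variance(img):
    """Spread of pixel values around their mean: higher means grainier."""
    vals = [v for row in img for v in row]
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)


# A flat mid-gray surface with random "grain", loosely like a low-sample frame.
random.seed(0)
noisy = [[0.5 + random.uniform(-0.4, 0.4) for _ in range(32)] for _ in range(32)]
clean = box_denoise(noisy)
print(variance(noisy) > variance(clean))  # True: much of the grain is smoothed out
```

A simple filter like this also blurs real edges and detail, which is the main reason learned denoisers that can tell noise from genuine detail are worth the effort.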

That’s what RT cores do, explained simply enough that even we can understand it!